TikTok becomes information battleground in Ukraine

On Tuesday, a man with the moniker “Ukraine solider” [sic] posted a live video on TikTok shot from a window showing blocks of flats in the blazing sun, sirens wailing in the background. Speaking in English, he thanked tens of thousands of followers for sending virtual gifts that can be converted into cash.

But in reality, it was overcast and snowing in Kyiv that day and the sounds reportedly did not match sirens heard in the city.

After the Financial Times flagged the account to TikTok, the Chinese video app removed the user — but not before the stream amassed more than 20,000 views and more than 3,600 gifts were sent, costing well-wishers around £50 in total.

The ByteDance-owned app with more than 1bn users is best known for viral dance videos but has recently turned into a news source for many young people watching Russia’s invasion of Ukraine through their phones. Ukrainian accounts have shared their experience, galvanising support and aid for their country.

But the conflict has also exacerbated shortcomings in TikTok’s moderation controls, which have enabled a proliferation of scam accounts posting fake content to attract followers and money, according to experts.

“There was an initial information vacuum that was especially vulnerable to being filled by inaccurate footage,” said Abbie Richards, a TikTok misinformation researcher.

In most cases, this consisted of users inadvertently posting misleading or inaccurate footage, Richards said. But in other instances, it has led to “people posting misleading content for likes, follows and views. Then there are people who are grifting, particularly when it comes to livestreams where donations are really easy.”

Researchers highlight financial incentives on TikTok, designed to reward creators, as having unintended consequences. Creators can receive virtual gifts (such as digital roses and pandas) during livestreams and convert them into Diamonds, a TikTok currency, which can then be withdrawn as real money. TikTok takes a 50 per cent commission on the money spent on virtual gifts, according to creators on the platform. The company said that it does not disclose the financial breakdown of video gifts and that the monetary value of Diamonds is based on “various factors.”

The FT found several livestreams about the Ukraine war that included misinformation, which had received thousands of virtual gifts and views. One video showed a destroyed building with mournful music, which the FT traced back to stock footage from a photographer in Latvia. TikTok removed multiple videos and accounts flagged by the FT for dangerous misinformation, illegal activity, regulated goods and authenticity violations.

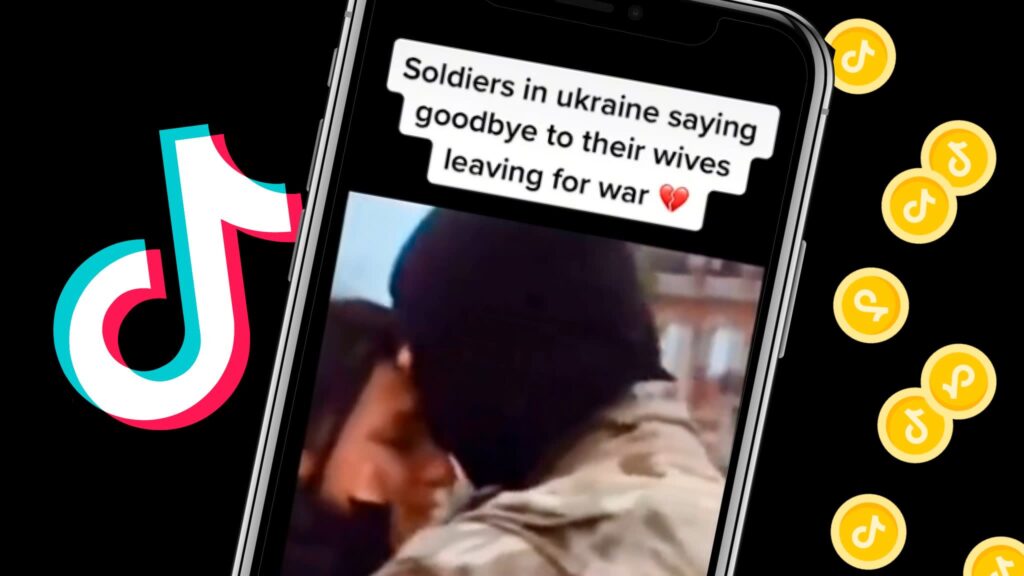

One of the top-ranked posts returned by a search for the hashtag Ukraine on the platform is a video of soldiers in military fatigues saying emotional goodbyes to women. It has been watched 7.3mn times but is actually a scene from a 2017 Ukrainian film, The War of Chimeras.

TikTok became the world’s fastest-growing social media company in 2020, as users flocked to the platform in the pandemic. But its huge growth has raised questions about the short-form video app’s moderation capabilities, as the company catches up with, and tries to learn from, its older social media rivals.

In order to address this problem, it has been ramping up its team of moderators in Europe whose members manually review the platform’s most violent and disturbing content, and it also uses artificial intelligence technology to flag offending material.

Bret Schafer, head of the information manipulation team at the Alliance for Securing Democracy, said TikTok is in the “nascent stages of its content moderation policies at scale around a conflict like this.”

“It has a more opaque system than Facebook or Twitter so it’s hard to know how effectively it [enforces policies]. But publicly its messaging has been aligned with Silicon Valley,” he added.

Social media companies are under increased scrutiny in the west for perceived failures to remove inaccurate content and Russian state propaganda during the escalation. Earlier this week, Meta-owned Facebook, Google’s YouTube and TikTok agreed to take down Russian state-backed media outlets Russia Today and Sputnik in the EU following requests by the bloc.

Experts argue that TikTok’s algorithm enables the spread of viral misinformation more than its peers. They have also noted that the platform’s editing tools allow users to easily repurpose or mix audio and visual content from varied sources.

On Monday, Russia’s communications regulator complained that there was anti-Russia content related to its “special military operation” in Ukraine on TikTok and demanded the company stop recommending military content to minors.

“The algorithm is currently aggressively promoting Ukraine-related content, irrespective of whether it’s true or not,” said Imran Ahmed, chief executive of the Centre for Countering Digital Hate, a campaign group that monitors hate speech on the internet. He added that TikTok does not provide enough data for users to verify content themselves.

On Friday, TikTok announced it would apply labels to content from some state-controlled media and add warning prompts to some videos and livestreams.

On Sunday, TikTok said it would suspend live-streaming and the posting of new content in Russia in response to the country’s recently implemented “fake news” law.

In a blog post, the company said it would continue to “review the safety implications of this law.” TikTok’s in-app messaging service will not be affected, it added.

TikTok’s flat-footed response stands in marked contrast to how quickly it acts to erase political content that risks upsetting Beijing. The company removed videos of the Hong Kong pro-democracy protests in the summer of 2019, according to the Information. TikTok has also blocked users from using the words “labour camp” and “re-education centres” in subtitles, in order to prevent discussion of China’s treatment of Uyghur minorities.

Michael Norris, senior research analyst at Shanghai-based consultancy AgencyChina, said the deluge of fake videos on TikTok showed there was “little sense of urgency” among ByteDance officials to tackle the situation.

TikTok said the safety of its community is a top priority and it is closely monitoring the situation with increased resources. “We take action on content or behaviour that threatens the safety of our platform, including removing content that contains harmful misinformation,” a spokesman added.