A global AI bill of rights is desperately needed

The writer is a science commentator

It is becoming increasingly hard to spot evidence of human judgment in the wild. Automated decision-making can now influence recruitment, mortgage approvals and prison sentencing.

The rise of the machine, however, has been accompanied by growing evidence of algorithmic bias. Algorithms, trained on real-world data sets, can mirror the bias baked into the human deliberations they usurp. The effect has been to magnify rather than reduce discrimination, with women being sidelined for jobs as computer programmers and black patients being de-prioritised for kidney transplants.

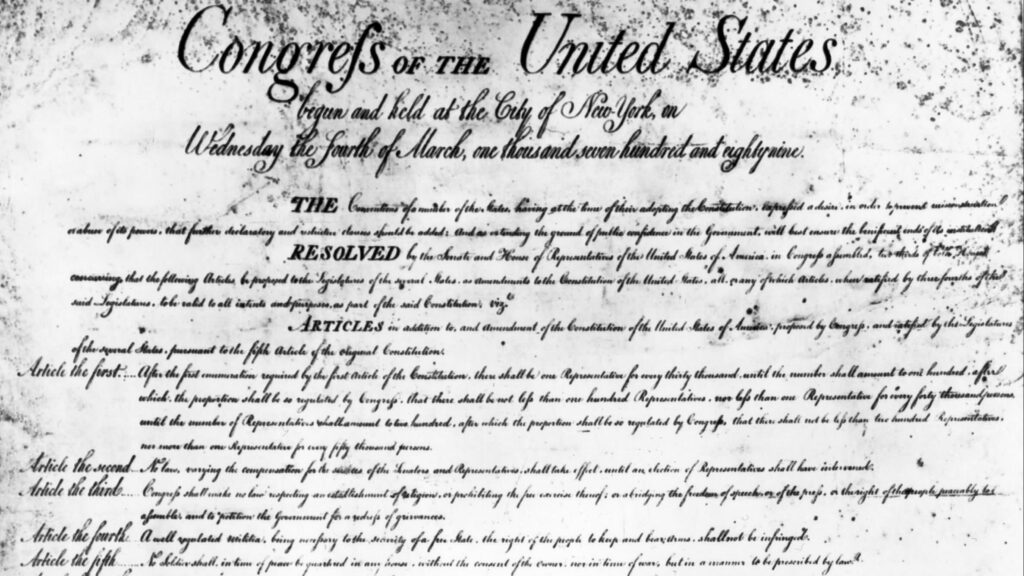

Now White House science advisers are proposing a Bill of Artificial Intelligence Rights, emulating the US Bill of Rights adopted in 1791. That bill, intended as a check on government power, enshrined such concepts as freedom of expression and the right to a fair trial. “In the 21st century, we need a ‘bill of rights’ to guard against the powerful technologies we have created . . . it’s unacceptable to create AI that harms many people, just as it’s unacceptable to create pharmaceuticals and other products — whether cars, children’s toys or medical devices — that will harm many people,” write Eric Lander, Biden’s chief science adviser, and Alondra Nelson, deputy director of science and society in the White House Office of Science and Technology Policy, in Wired.

A new bill could ensure, for example, a person’s right to know if and how AI is making decisions about them; freedom from algorithms that replicate biased real-world decision-making; and, importantly, the right to challenge unfair AI decisions.

Lander and Nelson are now canvassing views from industry, politics, civic organisations and private citizens on biometric technology, such as facial recognition and voice analysis, as a first step. Any bill might be accompanied by governments refusing to buy software or technology from companies that have not addressed these shortcomings.

This pro-citizen approach is in striking contrast to that adopted in the UK, which sees light-touch regulation in the data industry as a potential Brexit dividend. The UK government has even raised the prospect of removing or diluting Article 22 of the GDPR, which accords people the right to a human review of AI decisions. Last month, ministers launched a 10-week public consultation on their plans to create an “ambitious, pro-growth and innovation-friendly data protection regime”.

Article 22 was recently invoked in two legal challenges brought by drivers for ride-hailing apps. The drivers, for Uber and the Indian company Ola, claimed they were subject to unjust automated decisions, including financial penalties, based on data collected by the companies. Both companies were ordered to give drivers more access to their data, an important decision for workers in the heavily automated gig economy.

Shauna Concannon, an AI ethics researcher at Cambridge University, is broadly supportive of the bill that Lander and Nelson propose. She argues that citizens have a fundamental human right to challenge flawed AI decisions: “People sometimes think algorithms are superhuman and, yes, they can process information faster, but we now know they are incredibly fallible.”

The trouble with algorithmic decision-making is that the technology has come first, with the due diligence an afterthought. The rise of “explainable AI”, a field of machine learning that attempts to dissect what goes on in those black boxes, is a belated corrective. But it is not sufficient, given the known harms being done in society. Technology companies wield the kind of power once enjoyed only by governments, and for private profit rather than public good. For that reason, a global Bill of AI Rights cannot come soon enough.